What Is Survivorship Bias? | Definition & Examples

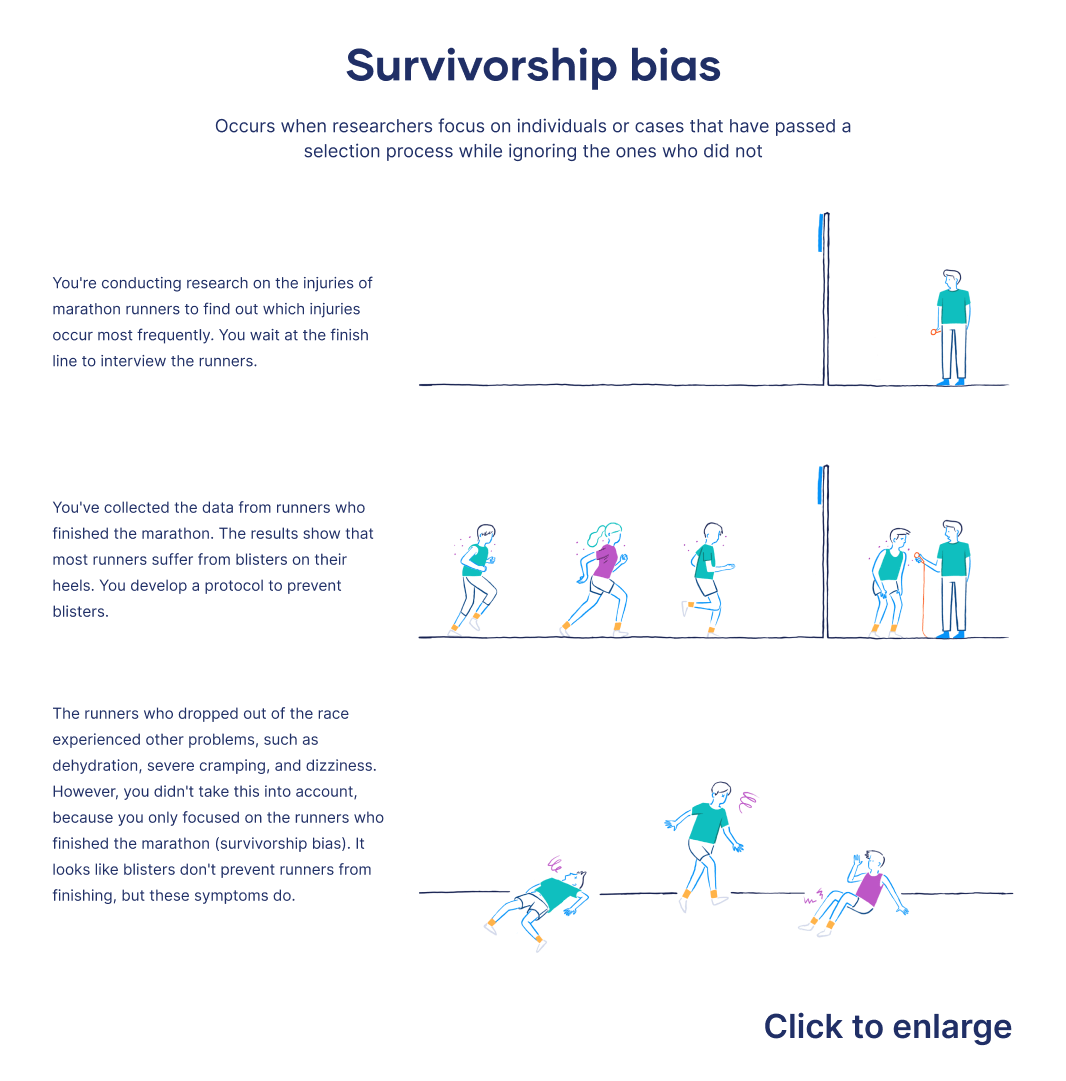

Survivorship bias occurs when researchers focus on individuals, groups, or cases that have passed some sort of selection process while ignoring those that did not. Because only a subset of the population is studied, it can lead researchers to form incorrect conclusions. Survivorship bias is a type of selection bias.

In addition to being a common form of research bias, survivorship bias can also lead to poor decision-making in other areas, such as finance, medicine, and business.

What is survivorship bias?

Survivorship bias is a form of selection bias. It occurs when a dataset only considers existing (or ‘surviving’) observations and fails to consider observations that have ceased to exist.

For example, when investigating the profitability of the tech industry, one has to also study businesses that went bankrupt, rather than only focusing on businesses currently in the market.

Focusing on a subset of your sample that has already passed some sort of selection process increases your chances of drawing incorrect conclusions. ‘Surviving’ observations may have survived exactly because they are more resilient to difficult conditions, while others have ceased to exist as a result of those same conditions.
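The effect on the tech-industry example can be illustrated with a small sketch. The firms and profit margins below are invented purely for illustration, and `average_margin` is a hypothetical helper rather than a standard function. The sketch only shows how the average changes once bankrupt firms are included.

```python
from statistics import mean

# Hypothetical profit margins (%) for eight tech firms; all numbers are made up.
# "active" marks firms still in the market; the others went bankrupt.
firms = [
    {"name": "A", "margin": 12.0, "active": True},
    {"name": "B", "margin": 8.0, "active": True},
    {"name": "C", "margin": 15.0, "active": True},
    {"name": "D", "margin": 5.0, "active": True},
    {"name": "E", "margin": -20.0, "active": False},
    {"name": "F", "margin": -35.0, "active": False},
    {"name": "G", "margin": -10.0, "active": False},
    {"name": "H", "margin": -25.0, "active": False},
]


def average_margin(firms, survivors_only):
    """Mean margin over surviving firms only, or over every firm."""
    margins = [f["margin"] for f in firms if f["active"] or not survivors_only]
    return mean(margins)


survivors_avg = average_margin(firms, survivors_only=True)
full_avg = average_margin(firms, survivors_only=False)

print(f"Survivors only: {survivors_avg:.2f}%")
print(f"All firms:      {full_avg:.2f}%")
```

With these invented numbers, the survivors-only average is 10.00%, while the average over all firms is -6.25%. The industry looks profitable only because the failures were left out.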

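A well-known example involves combat aircraft. Researchers studying the damage on planes that returned from missions were initially inclined to treat the most frequently hit areas as the ones most in need of protection. However, an engineer realised that the worst-hit planes never came back. The spots showing no damage on surviving planes were therefore the most dangerous places to be hit: planes hit there did not return. Because the researchers focused only on data from the returning planes, they were initially misled by survivorship bias.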
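The plane example can be reproduced with a rough simulation. Everything in this sketch is an assumption made for illustration: the section names, the loss probabilities in `LOSS_PROBABILITY`, and the simplification that each plane takes exactly one hit. It is not a model of any real dataset.

```python
import random
from collections import Counter

# Hypothetical probability that a single hit in each section downs the plane.
LOSS_PROBABILITY = {
    "engine": 0.8,
    "cockpit": 0.6,
    "wings": 0.15,
    "fuselage": 0.1,
}


def simulate(n_planes, seed=0):
    """Give each plane one hit in a random section and record who returns."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    sections = list(LOSS_PROBABILITY)
    all_hits = Counter()       # hits on every plane (never seen in practice)
    returned_hits = Counter()  # hits visible on planes that came back
    for _ in range(n_planes):
        section = rng.choice(sections)
        all_hits[section] += 1
        if rng.random() >= LOSS_PROBABILITY[section]:
            returned_hits[section] += 1
    return all_hits, returned_hits


all_hits, returned_hits = simulate(10_000)
for section in LOSS_PROBABILITY:
    print(f"{section:9s} all={all_hits[section]:5d} returned={returned_hits[section]:5d}")
```

Hits are spread roughly evenly across sections in the full data, but among returning planes the engine and cockpit show far fewer hits than the fuselage. An analyst who sees only the `returned` column could wrongly conclude that those sections are rarely hit.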

Why survivorship bias matters

When a study is affected by survivorship bias, we only pay attention to part of the data. This can have a number of consequences, such as:

- Overly optimistic conclusions that do not represent reality, leading us to think that circumstances are easier or more likely to work in our favour than they actually are. Studying only successful entrepreneurs or startups can have this effect.

- Misinterpretation of correlation, or seeing a cause-and-effect relationship where there isn’t one. For example, the high school or college dropouts who become successful entrepreneurs do so despite leaving school, not because of it.

- Incomplete decision-making, missing out on important information about those who didn’t ‘survive’, such as businesses that failed despite seemingly fertile growth environments or hard-working founders.

Awareness of survivorship bias is important because it impacts our perception, judgment, and the quality of our conclusions.

Survivorship bias examples

Survivorship bias can cloud our judgment not only in research, but in everyday life, too.

A common example is the popular misconception that goods such as electric appliances, cars, or other equipment produced in previous decades surpass contemporary goods in quality.

However, this doesn’t take into account that only the sturdier items have survived into the present day. As such, we only see the best examples; the other products that have broken down in the meantime are not visible to us.

This leads us to the false impression that all goods produced in the past were built to last.

Relatedly, before drawing any conclusions, you need to ask yourself whether your dataset is truly complete. Otherwise, you are also at risk of survivorship bias.

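Suppose, for example, you notice that the top three students in a ranking all went to the same high school. This may lead you to think that it is probably a really good high school.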

By doing so, you would mistake correlation for causation: the fact that the top three students went to the same high school may be a coincidence. The reason why they are excellent students is not necessarily because of their high school education.

To avoid survivorship bias and draw a conclusion about the quality of education offered in that high school, you would need more data from all the school’s students, and not just the ones who made it to the top three. For example, you could compare the average exam score of the school’s students to the county average.
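The comparison suggested above can be sketched in a few lines. The exam scores and the county average here are invented for illustration. The point is only that the top three students can look excellent while the school as a whole sits below the county average.

```python
from statistics import mean

# Hypothetical exam scores for all ten students from the school (made up).
school_scores = [98, 97, 96, 61, 58, 55, 52, 50, 49, 44]
# Hypothetical county-wide average exam score (also made up).
county_average = 68

# What you see if you only look at the students who "survived" to the top.
top_three = sorted(school_scores, reverse=True)[:3]
top_three_avg = mean(top_three)

# What you see once every student from the school is included.
school_avg = mean(school_scores)

print(f"Top three average: {top_three_avg}")
print(f"Whole-school average: {school_avg}")
print(f"County average: {county_average}")
print("School beats county:", school_avg > county_average)
```

With these numbers, the top three average 97, but the whole school averages 66, which is below the assumed county average of 68.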

How to prevent survivorship bias

Survivorship bias is a common logical error, but you can take several steps to avoid it:

- Consider what’s missing from your data, asking yourself: Is your dataset complete? What observations didn’t ‘survive’ from an event or selection procedure? Think of defunct businesses, school dropouts who didn’t become billionaires, or clinical trial participants who showed no improvement.

- Choose data sources that are designed to be complete and accurate. Be careful not to omit observations that no longer exist and would change your analysis or conclusions. For example, when reviewing the literature, also look for academic journals that publish negative study results.

- When cleaning your data, be mindful of outlier removal. You may accidentally remove critical information if you don’t understand what the outliers mean. It’s crucial to determine whether any extreme values are truly errors or instead represent something else. Experts on the subject matter of your research can help you with this, as they know the field best.
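As a minimal sketch of the outlier advice, one option is to flag extreme values for review instead of deleting them automatically. The returns below are invented, and the 1.5 × IQR rule is just one common convention chosen here for illustration. The source does not prescribe a specific method.

```python
from statistics import mean, quantiles

# Hypothetical yearly returns (%) for eleven funds; the -60 is a fund that
# collapsed, not a data-entry error. All numbers are made up.
returns = [-60, 3, 4, 5, 5, 6, 6, 7, 7, 8, 9]

# Quartiles and the conventional 1.5 * IQR fences.
q1, _, q3 = quantiles(returns, n=4)
iqr = q3 - q1
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr

# Flag extreme values for manual review rather than dropping them silently.
flagged = [r for r in returns if r < lower_fence or r > upper_fence]
kept = [r for r in returns if lower_fence <= r <= upper_fence]

print("Flagged for review:", flagged)
print("Mean with all funds:", mean(returns))
print("Mean after dropping flagged values:", mean(kept))
```

Here the only flagged value is the collapsed fund. Dropping it raises the mean return from 0 to 6, so removing it would reintroduce exactly the bias the analysis is trying to avoid. Whether such a value is an error or a real failure is a question for someone who knows the field.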

Being aware of survivorship bias, as well as being open and transparent about your assumptions, is the best strategy to prevent it.

Cite this Scribbr article

Nikolopoulou, K. (2024, January 12). What Is Survivorship Bias? | Definition & Examples. Scribbr. Retrieved 10 April 2025, from https://www.scribbr.co.uk/bias-in-research/survivorship-bias-explained/